Currently, most computer programs for the game of Go are based on Monte-Carlo procedures. The top bots have reached amateur dan level, as demonstrated on the KGS internet server in many games against a wide variety of human players. When such a program analyses a given go position, the result is not only a move proposal but also an evaluation, given as a percentage ("percent of success"). Here, a value of 50 % means an "almost balanced position" in the opinion of the bot, which may of course be wrong.

A value higher than 50 % means an advantage for the side for which the move proposal was computed. An important caveat: these %-values should not be interpreted as direct winning probabilities. Instead, experience shows that a value of 70 % or more typically means an almost sure win (a probability of 95 % or even more). On the other hand, a value of 30 % or less means that the side to move will very likely lose (in clearly more than 70 % of the cases).
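
The reading of the %-values described above can be written down as a small lookup. This is only a sketch of the rule of experience stated in the text: the function name and the verbal labels are my own choices, and the bands between 30 % and 70 % simply follow the "above or below 50 %" remark.

```python
def interpret_success(percent: float) -> str:
    """Map a bot's success % (for the side to move) to a rough verbal reading."""
    if percent >= 70:
        return "almost sure win"      # experience: 95 % or more in practice
    if percent <= 30:
        return "very likely loss"     # lost in clearly more than 70 % of cases
    if percent > 50:
        return "advantage"
    if percent < 50:
        return "disadvantage"
    return "almost balanced"          # exactly 50 %


for value in (75, 55, 50, 45, 25):
    print(f"{value} % -> {interpret_success(value)}")
```

The labels are deliberately coarse. The bot's number is not a probability, so a finer scale would suggest a precision that is not there.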

Why do the bots show these strange values and not "true winning chances"? The answer is easy: the %-values are not meant as information for human observers. They are only internal numbers that direct the search and the move decision. The future will show whether (commercial) programmers come up with more user-friendly evaluations.
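
To illustrate what "internal numbers to direct the move decision" can mean, here is a minimal sketch of a plain Monte-Carlo scheme in which the success % of a move is the share of simulated games (playouts) won after it. The candidate moves and counts are invented for illustration, and real bots, Many Faces included, use considerably more refined search and selection rules than this.

```python
# Hypothetical playout statistics for three candidate moves:
# move -> (playouts won by the side to move, playouts run).
stats = {
    "D4": (620, 1000),
    "Q16": (580, 1000),
    "K10": (450, 1000),
}


def success_percent(wins: int, playouts: int) -> float:
    """Share of won playouts, expressed in percent."""
    return 100.0 * wins / playouts


def best_move(move_stats: dict) -> str:
    """Pick the candidate with the highest success % (a simplification)."""
    return max(move_stats, key=lambda move: success_percent(*move_stats[move]))


choice = best_move(stats)
print(choice, success_percent(*stats[choice]))
```

Because the playouts are far from perfect play, the resulting share says more about which move to prefer than about the true chance of winning the game.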

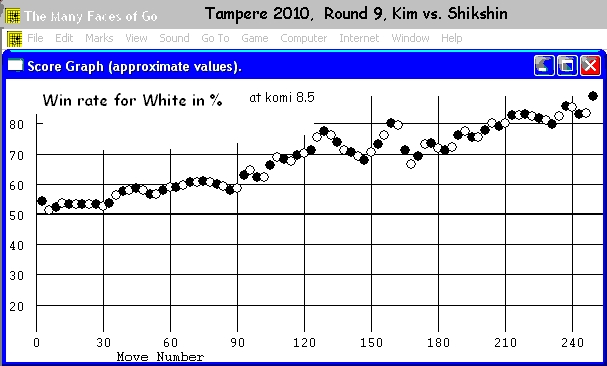

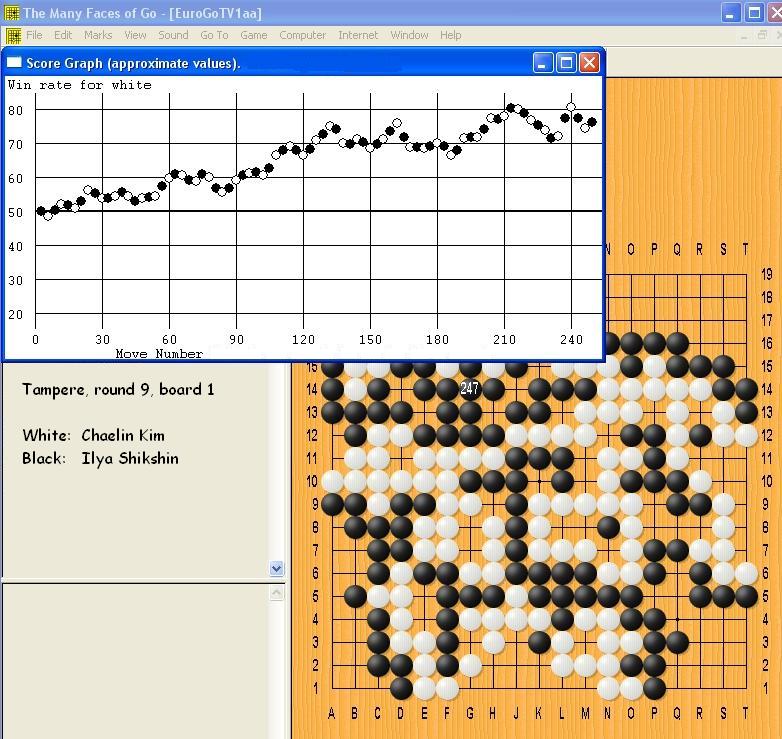

Many Faces of Go by David Fotland is a strong commercial go bot with a nice user interface. Many Faces has several features which can help to analyse a go game. Here we present an example for the case that the sgf-file of a complete game is given: Many Faces can generate a "curve" consisting of the "success %-values" for player White for all positions in the game.

The computing process for this curve is rather time-consuming. Therefore, the %-values are computed only for every third position. For a game with 250 moves, the total computation takes about 30 seconds on a normal PC.
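
A quick back-of-the-envelope calculation shows what these numbers imply per position. The sketch below assumes that sampling starts at move 1 (the text does not say which positions are picked) and that the time is spread evenly over the sampled positions.

```python
def sampled_moves(total_moves: int, step: int = 3) -> list:
    """Move numbers evaluated when only every `step`-th position is computed.

    Assumption: sampling starts at move 1.
    """
    return list(range(1, total_moves + 1, step))


TOTAL_MOVES = 250
TOTAL_SECONDS = 30  # "about 30 seconds" according to the text

positions = sampled_moves(TOTAL_MOVES)
seconds_per_position = TOTAL_SECONDS / len(positions)

print(len(positions))                                # number of evaluated positions
print(round(seconds_per_position, 2))                # seconds per evaluated position
print(round(seconds_per_position * TOTAL_MOVES, 1))  # estimate for every position
```

Under these assumptions, 84 positions are evaluated at roughly 0.36 seconds each. Evaluating all 250 positions at the same rate would take about 90 seconds.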

In July/August 2010, the European Go Congress took place in Tampere (Finland). In the main tournament (10 rounds, 459 players from all over the world), Ilya Shikshin (7-dan, from Russia) won with 8 points, ahead of three other players with the same score: Artem Kachanovsky (Ukraine), Chaelim Kim, and JungHyeop Kim (both from South Korea).

In round 9, Shikshin (Black) and Chaelim Kim (White) met on board 1. Kim achieved a clear victory, although at the end his margin was only 2.5 points.

Here you can download the sgf of Kim vs Shikshin.

The following diagram shows the % of success for White, as computed by Many Faces.

My interpretation: Many Faces believes that White had a clear edge already early in the game. From move 125 on, his % score was always near or above 70 %.

A warning: Of course one has to be careful when a 1-dan bot is used to evaluate a game between 7-dan players, and I know that my interpretation may be wrong. But the relatively smooth flow of the game (no really violent fights) is an indication that Many Faces is rather reliable in its evaluations.

Rule of thumb: The more peaceful a go game, the more reliable the evaluations of Monte-Carlo bots.

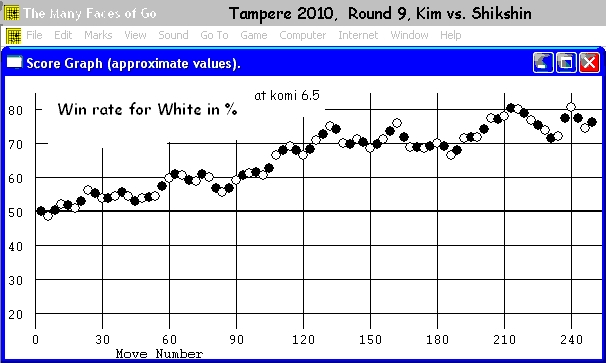

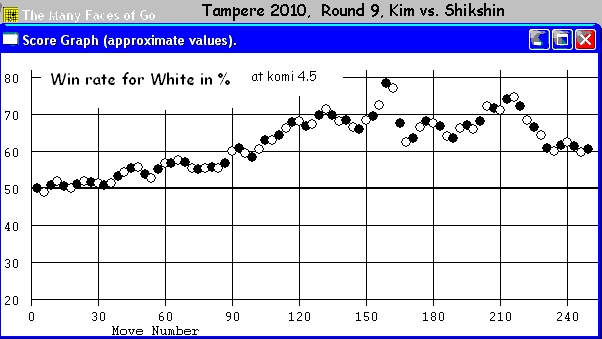

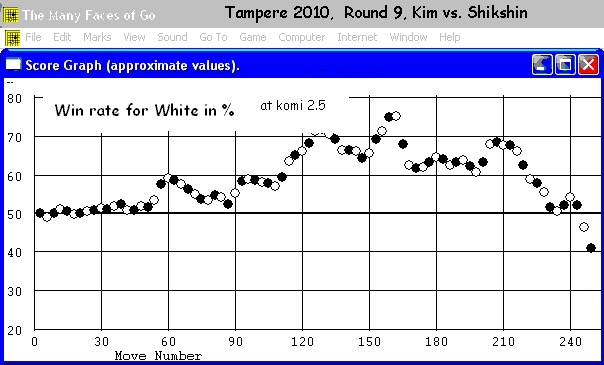

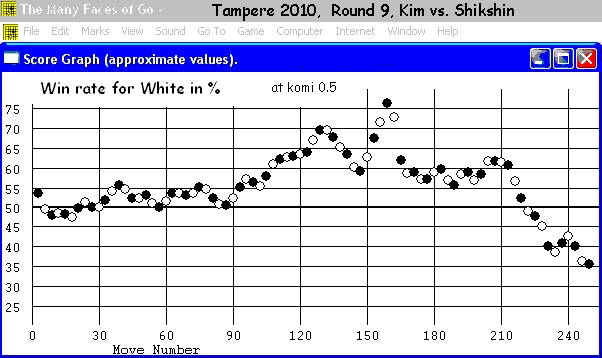

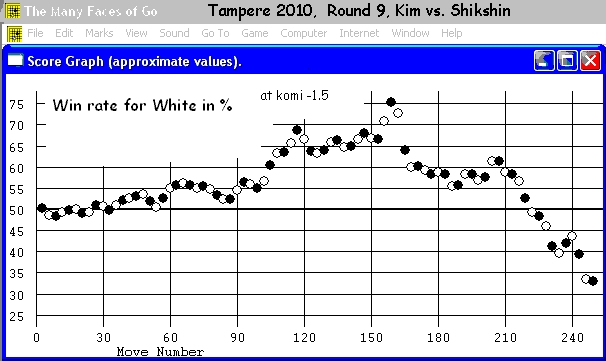

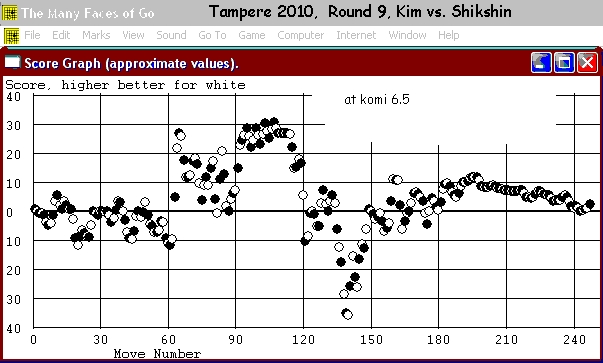

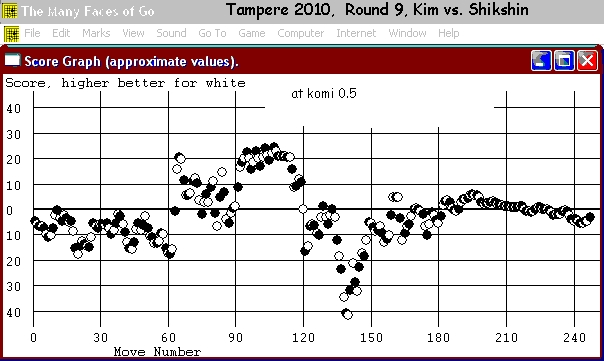

One nice thing that can be done with "Many Faces of Go" is to modify komi and to ask the bot for its %-values under the new komi. I did this for the Kim-Shikshin game with several komi settings: 8.5, 6.5 (the true value), 4.5, 2.5, 0.5, and -1.5. The resulting diagrams can be seen below.

For 8.5, 6.5, 4.5 the curves stay clearly above 50 %. For the smaller values (2.5, 0.5, -1.5) the curves finish clearly below 50 %. That is not a surprise, as the game ended with a 2.5-point victory for White (at komi 6.5). The fact that even these curves stay clearly above 50 % for a very long time (until move 220+) indicates that White had a clearer advantage in between, but safely "rolled home" with a small margin.
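
The arithmetic behind "not a surprise" can be checked directly. The sketch below assumes that the moves of the game stay exactly as played and that only komi changes, so White's final margin shifts by the difference in komi. The function and constant names are mine.

```python
ACTUAL_KOMI = 6.5          # komi used in the real game
ACTUAL_WHITE_MARGIN = 2.5  # White won by 2.5 points at that komi


def white_margin(komi: float) -> float:
    """White's final margin if the same game were scored with another komi."""
    return ACTUAL_WHITE_MARGIN + (komi - ACTUAL_KOMI)


for komi in (8.5, 6.5, 4.5, 2.5, 0.5, -1.5):
    margin = white_margin(komi)
    winner = "White" if margin > 0 else "Black"
    print(f"komi {komi:+.1f}: {winner} wins by {abs(margin):.1f}")
```

With komi 8.5, 6.5 and 4.5, White still wins (by 4.5, 2.5 and 0.5 points). With komi 2.5, 0.5 and -1.5, Black wins (by 1.5, 3.5 and 5.5 points). This matches where the curves finish.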